Are you choosing the right GPU to run large language models like GPT, Llama or Mistral in production? It is not just a question of how much money you have. It is whether you can deliver answers quickly, reliably, and without spending your whole budget on power and cooling. In 2026 the choice between NVIDIA A100, H100 and CPU offloading is no longer a theoretical dilemma. It is a practical decision that determines whether your chatbot answers in under a second, or leaves users waiting as if they were on a 1990s dial-up connection.

Why GPU choice is critical for LLM inference

Large language models have grown so big that they no longer fit in the memory of ordinary servers. A model like Llama 3.1 70B needs about 140 GB just to hold its weights in 16-bit precision (roughly 70 GB at 8 bits), more than a single 80 GB GPU offers, and that is before it answers a single question. Every time a user asks something, the model performs on the order of a hundred billion arithmetic operations for each token it generates, and they have to happen fast. If it takes 3 seconds to answer a simple question, users will leave. So which hardware gives you the fastest answers at the lowest price?

That is where GPUs come in. They are not just faster than CPUs; for this workload they are orders of magnitude faster. But not all GPUs are equal. NVIDIA A100 and H100 are the two biggest players. And then there is CPU offloading, a way of trying to run large models with little or no GPU memory. But it is no miracle.
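A back-of-the-envelope sketch of the memory claim above, assuming the weights dominate and ignoring the KV cache, activations and framework overhead: weight memory is roughly parameter count times bytes per parameter, and a dense decoder costs roughly two floating-point operations (one multiply, one add) per parameter per generated token. The function names are illustrative, not from any library.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate weight memory in GB (10**9 bytes): parameters x bytes per parameter."""
    return params_billion * bits_per_param / 8

def flops_per_token(params_billion: float) -> float:
    """Rule of thumb for a dense decoder: ~2 FLOPs per parameter per generated token."""
    return 2 * params_billion * 1e9

GPU_MEMORY_GB = 80  # a single 80 GB A100 or H100

for bits in (16, 8, 4):
    need = weight_memory_gb(70, bits)
    # "fits" means the weights alone fit; the KV cache still needs room on top.
    verdict = "fits" if need < GPU_MEMORY_GB else "does not fit"
    print(f"Llama 3.1 70B @ {bits}-bit: {need:.0f} GB of weights, {verdict} in {GPU_MEMORY_GB} GB")

print(f"~{flops_per_token(70):.1e} FLOPs per generated token")
```

At 8 bits the weights technically fit in 80 GB, but with only about 10 GB left for everything else, which is why quantization and memory capacity come up again and again below.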

A100: The old star that still works

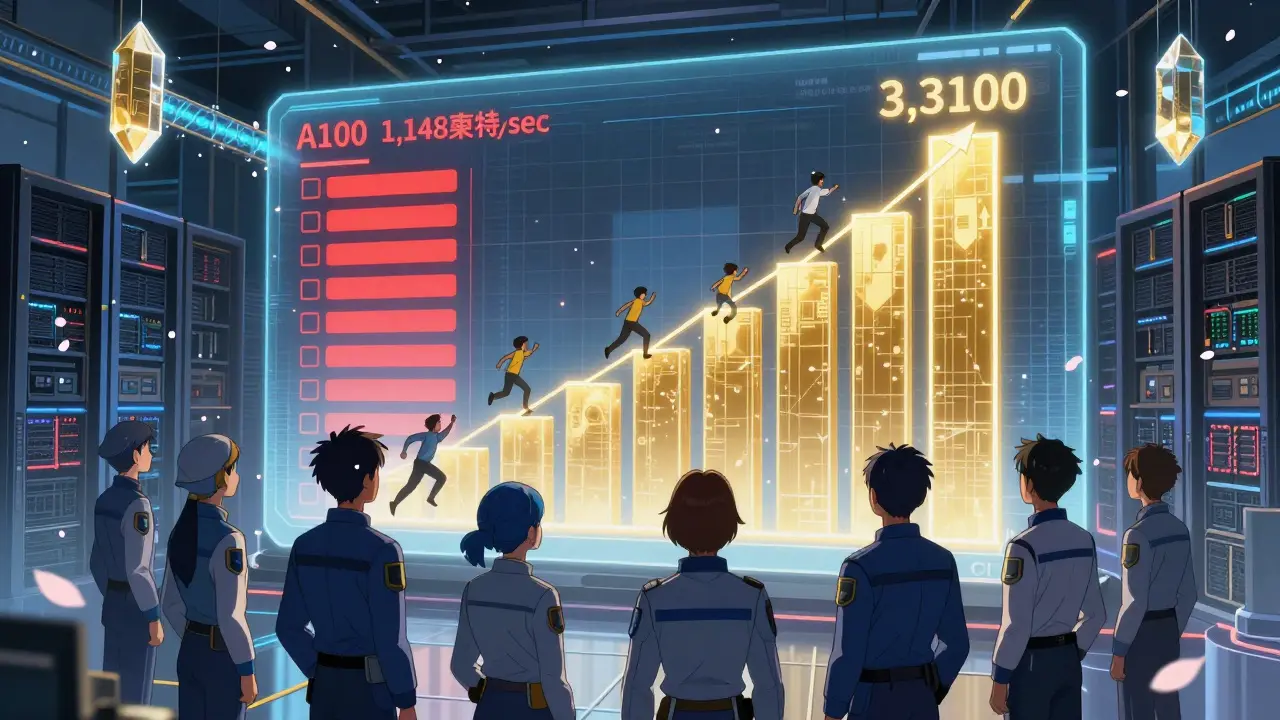

NVIDIA A100 came out in May 2020 and was a breakthrough in AI hardware. In its 80 GB HBM2e version, with about 2.0 TB/s of memory bandwidth, it could run models that had previously existed only in research labs. In 2022 it was the most widely used GPU for LLM inference in enterprises. Today it is no longer top of the line, but it is not obsolete.

A100 has 6,912 CUDA cores and third-generation Tensor Cores. It manages about 1,148 tokens per second when running Llama 3.1 70B with vLLM. That is fast, but not fast enough for high volume. In a test by Hyperstack.cloud in April 2025, A100 cost $0.75 per hour. That is cheaper than H100, but you get less for your money: every token you generate costs more than on H100.

For models under 13 billion parameters, such as Mistral 7B or Phi-2, A100 is still a good choice. It is easy to set up: nearly all tools, including vLLM, TensorRT-LLM and DeepSpeed, support it out of the box, and getting it to run well takes 1-3 days. That is a big advantage if you do not have a large engineering team.

H100: The new standard for production inference

NVIDIA H100 came out in November 2022. It was not just an upgrade; it was a leap. With HBM3 memory, up to 3.35 TB/s of memory bandwidth (SXM version) and fourth-generation Tensor Cores, it is 2-3 times faster than A100 for LLM inference. And that is only the beginning.

H100 has 14,592 CUDA cores in the PCIe version and 16,896 in the SXM version, more than twice as many as A100. But what really sets it apart is the Transformer Engine. This technology lets the GPU switch dynamically between FP8 and 16-bit (FP16/BF16) precision during inference, so it uses less memory and does more work per cycle. For a 30B model, this gives 3.3 times faster inference compared to A100.
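To make the precision switch more concrete, here is a simplified, pure-Python emulation of the idea behind FP8 inference with per-tensor scaling: scale a tensor so its largest magnitude lands at the top of the FP8 E4M3 range (448), round to 3 mantissa bits, then scale back. This is not the Transformer Engine API and ignores its delayed-scaling history and hardware details; the function names are hypothetical and it only illustrates how much precision FP8 keeps.

```python
import math

E4M3_MAX = 448.0     # largest finite value in FP8 E4M3
E4M3_MIN_EXP = -6    # smallest normal exponent; smaller values become subnormal
MANTISSA_BITS = 3    # E4M3 stores 3 explicit mantissa bits

def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    mag = min(abs(x), E4M3_MAX)
    exp = max(math.floor(math.log2(mag)), E4M3_MIN_EXP)
    step = 2.0 ** (exp - MANTISSA_BITS)  # gap between neighbouring E4M3 values at this exponent
    return math.copysign(min(round(mag / step) * step, E4M3_MAX), x)

def fp8_roundtrip(tensor: list[float]) -> list[float]:
    """Per-tensor scaling: map the largest magnitude onto E4M3_MAX, quantize, scale back."""
    amax = max(abs(v) for v in tensor)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) / scale for v in tensor]

weights = [0.01, -0.5, 2.0]
print(fp8_roundtrip(weights))
```

With 3 mantissa bits, the relative rounding error for normal values is at most 1/16 (about 6%). The scaling step matters because it keeps values in the range where that precision applies, instead of letting small values sink into subnormals or zero.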

In a test with Llama 3.1 70B, H100 generated 3,311 tokens per second, nearly three times as many as A100. And even though H100 costs 1.7 times more per hour, the test found it 18% cheaper per token. So if you have many users, you save money in the long run.
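A small sketch of the cost-per-token arithmetic, using only figures quoted in this article: $0.75 per hour for A100 (the Hyperstack.cloud test), an H100 price of 1.7 times that, and the measured 1,148 and 3,311 tokens per second. Taken at face value these inputs imply a saving of roughly 41% per token rather than 18%, so the reported 18% presumably rests on different prices or load conditions than the ones combined here.

```python
def cost_per_million_tokens(usd_per_hour: float, tokens_per_second: float) -> float:
    """Hourly price divided by tokens produced per hour, scaled to one million tokens."""
    tokens_per_hour = tokens_per_second * 3600
    return usd_per_hour / tokens_per_hour * 1_000_000

A100_USD_PER_HOUR = 0.75                     # Hyperstack.cloud figure quoted above
H100_USD_PER_HOUR = 1.7 * A100_USD_PER_HOUR  # assumption: "1.7 times more per hour"

a100 = cost_per_million_tokens(A100_USD_PER_HOUR, 1148)
h100 = cost_per_million_tokens(H100_USD_PER_HOUR, 3311)
print(f"A100: ${a100:.3f} per million tokens")
print(f"H100: ${h100:.3f} per million tokens")
print(f"H100 saving per token: {1 - h100 / a100:.0%}")
```

The general rule behind it: a GPU that costs k times more per hour is cheaper per token whenever its throughput is more than k times higher.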

It is also better at parallelism. A company in the financial sector reported that it could serve 37 concurrent users on an H100, versus only 22 on an A100, before responses became too slow. And with fourth-generation NVLink at 900 GB/s, you can connect several H100s with little loss of speed. That is critical if you run models larger than 80 GB, like the new 100B+ models.
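Concurrency on a single GPU is largely bounded by memory: every active user needs a KV cache (the stored attention keys and values) that grows with context length. The sketch below estimates that cache for Llama 3.1 70B's published shape (80 layers, 8 key-value heads with head dimension 128, FP16 cache); the context length and free-memory values are hypothetical, and it does not try to reproduce the 37-versus-22 figure reported above.

```python
def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                             bytes_per_value: int = 2) -> int:
    """Keys + values (factor 2) stored for every layer and KV head, per token."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value

# Llama 3.1 70B uses grouped-query attention: 8 KV heads of dimension 128 across 80 layers.
per_token = kv_cache_bytes_per_token(layers=80, kv_heads=8, head_dim=128)  # FP16 cache
context = 4096  # hypothetical context length per user
per_user_gib = per_token * context / 2**30

print(f"{per_token / 1024:.0f} KiB per token, {per_user_gib:.2f} GiB per user at {context} tokens")

for free_gib in (10, 20):  # hypothetical memory left over after loading the weights
    print(f"{free_gib} GiB free -> about {int(free_gib // per_user_gib)} users at this context length")
```

Because each extra user costs a fixed slice of memory, more memory and higher bandwidth translate fairly directly into more simultaneous conversations before latency degrades.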

The H100 NVL variant pairs two GPUs over NVLink, with 188 GB of HBM3 memory in total. It is designed for the very largest models. If you run a model such as Qwen1.5-110B, or one with 200B parameters using quantized weights, it is almost the only option.

CPU offloading: When you cannot afford a GPU

If you cannot pay for an H100, or do not have access to one, CPU offloading is an alternative route. This method lets the CPU hold parts of the model in system RAM while the GPU handles only the most critical computations. Tools such as vLLM (which can offload part of the weights to CPU memory) and Hugging Face Accelerate make this possible.

It works. You can run Llama 3 8B on a machine with 32 GB of RAM, which is impressive. But it is not for production. According to the MLPerf benchmarks from December 2024, CPU offloading increases latency by 3-10 times. On an H100 it takes 200-500 ms to generate a token; with CPU offloading it takes 2-5 seconds. That is not just slow; it is unacceptable for a chatbot, a customer-service bot or an AI assistant.
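A minimal sketch of how an offloading plan can be formed, loosely in the spirit of Hugging Face Accelerate's `device_map="auto"`, which fills GPU memory first and places the remaining layers in CPU RAM. The function `split_layers` and the numbers are hypothetical; real frameworks also account for embeddings, buffers and per-device memory limits.

```python
def split_layers(layer_sizes_gb: list[float], gpu_budget_gb: float) -> dict[str, list[int]]:
    """Greedily place consecutive layers on the GPU until its budget is used up;
    every layer after that stays in CPU RAM."""
    placement: dict[str, list[int]] = {"gpu": [], "cpu": []}
    used = 0.0
    for i, size in enumerate(layer_sizes_gb):
        # Once one layer spills to CPU, keep the rest there so the forward pass
        # crosses the GPU/CPU boundary only once.
        if not placement["cpu"] and used + size <= gpu_budget_gb:
            placement["gpu"].append(i)
            used += size
        else:
            placement["cpu"].append(i)
    return placement

# Hypothetical example: a 70B model in FP16 (~140 GB) as 80 equal layers of 1.75 GB,
# with 70 GB of an 80 GB GPU reserved for weights (the rest for KV cache and activations).
layers = [1.75] * 80
plan = split_layers(layers, gpu_budget_gb=70)
print(len(plan["gpu"]), "layers on GPU,", len(plan["cpu"]), "layers in CPU RAM")
```

Half the model ends up in CPU RAM here, and every one of those layers has to be served from slower memory on every token, which is where the latency penalty comes from.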

A survey of 142 threads on the Hugging Face forums in May 2025 found that 92% of users who tried CPU offloading with 7B models reported response times of 8-15 seconds. That is not a bug; it is a limitation. CPUs are not designed for the massively parallel, memory-hungry computation that LLMs require. Even the best server CPUs, like the AMD EPYC 9654, manage only 1-5 tokens per second in such scenarios.
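The 1-5 tokens per second figure is consistent with a simple bound: generating one token in a single stream means reading essentially every weight from memory once, so tokens per second cannot exceed memory bandwidth divided by model size. This sketch assumes FP16 weights (140 GB), treats each device as if the weights were resident, ignores compute and KV-cache traffic, and uses approximate peak bandwidths; the CPU figure assumes 12 channels of DDR5-4800.

```python
def max_tokens_per_second(weight_gb: float, bandwidth_gb_s: float) -> float:
    """Single-stream decode upper bound: every token reads all weights from memory once."""
    return bandwidth_gb_s / weight_gb

WEIGHTS_GB = 140  # Llama 3.1 70B in FP16

# Approximate peak memory bandwidths in GB/s (assumed values, see lead-in).
systems = {
    "AMD EPYC 9654 (DDR5)": 460.8,
    "A100 80GB (HBM2e)": 2000,
    "H100 SXM (HBM3)": 3350,
}
for name, bw in systems.items():
    print(f"{name}: at most ~{max_tokens_per_second(WEIGHTS_GB, bw):.1f} tokens/s per stream")
```

Batching many users lets a GPU reuse each weight read for many tokens at once, which is how aggregate figures like 3,311 tokens per second become possible; a bandwidth-starved CPU has far less to gain from the same trick.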

It is also hard to set up. Developers on GitHub report that it takes 5-7 days to get CPU offloading working reliably with a 70B model. The documentation is poor, and most guides are specific to one library or one Linux version. It is nothing like setting up an H100, where you have NVIDIA's own guides, rated 4.2/5 for clarity.

It is a compromise. And a compromise is not the same as a solution. CPU offloading is fine for testing, development, or when you only need to run a model once a day. Not for serving AI to a thousand users.

Price and availability in 2026

In 2025 something important happened: the price of H100 in the cloud dropped by 40%, making it more economical than ever. Gartner reported in May 2025 that 62% of new enterprise LLM inference deployments use H100, only 28% use A100, and 10% use CPU-based solutions.

H100 is now the most economical choice for models above 13B parameters. Even though it costs more per hour, it generates more tokens per dollar. The math is simple: if you earn money on every user, you will earn more with H100.

A100 is still available, and cheap. But it is becoming outdated faster. It does not have enough memory bandwidth for new models with 100B+ parameters, and it will buckle under the load. H100, with HBM3, is the only one that can keep up with the future.

There are also new competitors. AMD MI300X is fast, but it delivers only about 1.7 times the throughput of A100 while costing 85% of the price of an H100. That is not enough. Google's TPU v5p is faster for some models, but it supports only a handful of frameworks, and you would have to rewrite much of your code to use it. That is not realistic for most teams.

What should you choose?

Here is a simple guide:

- Choose H100 if: you run models above 13B parameters, you have more than 10 concurrent users, you need answers in under 1 second, or you plan to scale up. It is the only option that gives you future-proofing.

- Choose A100 if: you run small models (under 13B), you have a limited budget, you do not need high concurrency, and you want a stable, well-tested solution. It is good for testing, prototyping and low volume.

- Avoid CPU offloading for production if: you need fast responses. It is only for development, experimentation, or when you have no access to GPUs. Do not use it for customer service, apps or public APIs.
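The guide above can be encoded as a small decision function. This is a hypothetical sketch that applies the article's thresholds (13B parameters, 10 concurrent users, sub-second answers, plans to scale) literally and in order; the `Workload` fields are illustrative, and a real decision should also weigh budget and measured latency.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    params_billion: float      # model size in billions of parameters
    concurrent_users: int
    needs_subsecond: bool      # answers must arrive in under 1 second
    plans_to_scale: bool
    production: bool = True    # serving real users, not just development/experiments
    gpu_available: bool = True

def recommend(w: Workload) -> str:
    """Apply the guide's rules of thumb in order."""
    if not w.gpu_available:
        # CPU offloading is only acceptable outside production.
        return "no production option without a GPU" if w.production else "CPU offloading"
    if (w.params_billion > 13 or w.concurrent_users > 10
            or w.needs_subsecond or w.plans_to_scale):
        return "H100"
    return "A100"  # small model, modest load: cheaper and well-tested

print(recommend(Workload(params_billion=70, concurrent_users=5,
                         needs_subsecond=False, plans_to_scale=False)))
```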

It is simple. H100 is not just faster; it is smarter. The Transformer Engine, HBM3 and fourth-generation NVLink are not just numbers on a datasheet. They are real improvements that make models work the way they should: fast, stable and at scale.

A company in San Francisco used A100 in 2024. In March 2025 it switched to H100, and its user engagement rose by 63%. Not because the model got better, but because it answered faster. Users like speed. And speed does not always cost more; in 2026 it is often cheaper.

What about the future?

NVIDIA has already released H100 Turbo, a version with better cooling and 20% more performance, which shows the company is not standing still. Meanwhile, IDC expects 75% of all production LLM inference in 2027 to run on H100-class GPUs.

This means that if you build new infrastructure in 2026, H100 is the only option that will stay relevant for 3-5 years. A100 will become a legacy solution. And CPU offloading? It will remain a tool for developers who cannot afford to run in the cloud, not for companies that want to grow.

It is not just about which GPU you choose. It is about which future you are building.

Is H100 worth the extra cost compared to A100?

Yes, if you run models above 13 billion parameters or have more than 10 concurrent users. Even though H100 costs 1.7 times more per hour, it generates nearly 3 times as many tokens, so each token you produce costs less (18% less in the cited test). For high-volume businesses, that saves money in the long run. For small models under 13B, A100 is still a good, cheaper alternative.

Can I run Llama 3.1 70B on a CPU?

Technically, yes. With CPU offloading and enough RAM (at least 128 GB, and more if you keep the weights in 16-bit precision, since they alone take about 140 GB), you can load and run a 70B model on a server CPU. But each token takes 2-5 seconds, which is unacceptable for production; users will leave. It is only suitable for testing, development or simple experiments. Not for a public service.

What is the Transformer Engine, and why does it matter?

The Transformer Engine is a new feature in H100 that automatically switches between FP8 and 16-bit (FP16/BF16) precision during inference. This lets the model use less memory and perform more operations per cycle. For models like Llama 3.1, Qwen or Mistral, it gives 2-6 times faster inference compared to A100. It is not just an upgrade; it is a new way of running large language models.

Is it a good idea to use A100 in 2026?

Only if you run small models (under 13B parameters), have a limited budget, and do not need high concurrency. For larger models or production use, A100 will become a bottleneck. Memory bandwidth is the biggest constraint for modern LLMs, and A100 does not have enough. H100 is the future; A100 is a transitional solution.

What is the difference between H100 and H100 NVL?

H100 NVL pairs two GPUs over NVLink, with 188 GB of HBM3 memory in total. It is designed for models larger than 80 GB, such as Qwen1.5-110B or other models with more than 100 billion parameters. A standard H100 has 80 GB. H100 NVL lets you run larger models on a single NVLink-connected pair instead of spreading them across more devices, which reduces latency and simplifies the infrastructure.

Can I use AMD MI300X instead of H100?

Technically yes, but it is not recommended. AMD MI300X is about 1.7 times faster than A100, well short of H100's nearly threefold gain, while costing 85% of H100's price. That means you get less performance per dollar than with H100. In addition, TensorRT-LLM runs only on NVIDIA GPUs, and support for MI300X in other LLM tools such as vLLM is less mature than for NVIDIA hardware. You will need more development time and will have poorer documentation.
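The per-dollar claim is easy to check with the numbers in this answer, assuming the 1.7x figure is relative to A100, the 85% is relative to H100's price, and using the measured H100 speedup of 3,311 / 1,148 tokens per second quoted earlier. Only the ratio between the two results is meaningful, since the units are relative.

```python
def perf_per_dollar(throughput_vs_a100: float, price_vs_h100: float) -> float:
    """Relative throughput per unit of relative price; compare results only to each other."""
    return throughput_vs_a100 / price_vs_h100

h100 = perf_per_dollar(3311 / 1148, 1.00)  # measured speedup over A100, ~2.9x
mi300x = perf_per_dollar(1.7, 0.85)        # 1.7x A100 at 85% of H100's price
print(f"MI300X delivers {mi300x / h100:.0%} of H100's performance per dollar")
```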

Why is CPU offloading so slow?

CPUs are designed to run a relatively small number of fast, sequential threads, not millions of small calculations at once. LLMs require enormous amounts of parallel operations. When you move model weights to CPU memory, data has to be shuttled constantly back and forth between CPU and GPU, and that is extremely slow. Every token becomes a trip between two different worlds. It is like driving a car whose engine has to stop and restart at every turn of the wheel.
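The cost of that back-and-forth can be estimated directly. If an offloading setup copies the CPU-resident weights to the GPU for every forward pass, as Hugging Face Accelerate's CPU offload does, the CPU-GPU link bandwidth sets a floor on per-token latency. The split and bandwidths below are assumptions (roughly 32 GB/s for PCIe 4.0 x16 and 64 GB/s for PCIe 5.0 x16 per direction), and real transfers run below these peaks.

```python
def offload_seconds_per_token(offloaded_gb: float, link_gb_s: float) -> float:
    """Minimum time to move the offloaded weights across the CPU-GPU link once per token."""
    return offloaded_gb / link_gb_s

# Hypothetical split: 70B model in FP16 (~140 GB) with ~70 GB kept on an 80 GB GPU,
# so ~70 GB must be streamed from CPU RAM for every generated token.
OFFLOADED_GB = 70

# Approximate per-direction peak bandwidth of an x16 link (assumed values).
for link, bw in {"PCIe 4.0 x16": 32, "PCIe 5.0 x16": 64}.items():
    print(f"{link}: >= {offload_seconds_per_token(OFFLOADED_GB, bw):.1f} s per token")
```

Even at peak link speed this lands at one to two seconds per token, in the same range as the 2-5 seconds quoted above, before any compute time is counted.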

What about Google TPU v5p?

TPU v5p is faster than H100 for some models, but only for models tailored to the TPU architecture. Most LLM tools, such as vLLM, DeepSpeed and TensorRT-LLM, have little or no TPU support, so you would have to rewrite your entire inference pipeline. That is not practical for most companies. H100 has an ecosystem that works out of the box. TPU is an experiment, not a solution.
